ABSTRACT

This article introduces Python’s rich ecosystem of Data Science libraries.

It shows how to use these libraries, with helpful examples and exercises aimed at beginners and intermediate learners.

Python has a very rich set of libraries that are widely used across the Data Science industry. Moreover, all of the libraries covered here are open source.

Based on my recent experience, I outline some of the most useful of these libraries for data scientists and engineers.

Core Libraries

1. NumPy

NumPy (short for Numerical Python) is the fundamental package for scientific computing with Python, and the rest of the scientific computing stack is built around it. It is mostly used for array and matrix problems, and it provides an abundance of useful features for operations on n-dimensional arrays and matrices. The library also vectorizes mathematical operations on the NumPy array type, which improves performance and speeds up execution.
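To make the idea of vectorization concrete, here is a minimal sketch. The sample values and variable names are my own assumptions for illustration; the point is only that one array expression replaces an explicit Python loop.

```python
import numpy as np

# Hypothetical sample data for illustration.
values = np.arange(1, 6, dtype=np.float64)

# Element-by-element version using a Python loop.
squared_loop = np.array([v * v for v in values])

# Vectorized version: the multiplication is applied to the whole array at once.
squared_vec = values * values

print(squared_vec)                                # [ 1.  4.  9. 16. 25.]
print(np.array_equal(squared_loop, squared_vec))  # True
```

On large arrays the vectorized form is usually much faster, because the loop runs inside NumPy's compiled code instead of the Python interpreter.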

Creating a NumPy Array:

>>> import numpy as np

>>> arr = np.array([])

>>> type(arr)

<class 'numpy.ndarray'>

Creating a One-Dimensional Array:

>>> one_d_array = np.array([1, 2, 3, 4, 5])

# the ndim attribute shows the number of dimensions of an array

>>> one_d_array.ndim

1

# the size attribute returns the total number of elements in the array

>>> one_d_array.size

5

Creating a Sequence of Numbers:

# if a single parameter is passed, the sequence starts from 0

>>> print(np.arange(10))

[0 1 2 3 4 5 6 7 8 9]

# the first parameter denotes the starting point

# the second parameter denotes the ending point (exclusive)

# if the third parameter is not specified, 1 is used as the default step

>>> print(np.arange(1, 10))

[1 2 3 4 5 6 7 8 9]

>>> print(np.arange(1, 10, 2)) # here 2 is the step

[1 3 5 7 9]

Reshaping an Array:

>>> np.arange(10).reshape(2, 5) # 1d array reshaped into 2d.

array([[0, 1, 2, 3, 4],

       [5, 6, 7, 8, 9]])

# flatten an array

>>> np.arange(10).reshape(2, 5).ravel()

array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])

# transpose an array

>>> np.arange(10).reshape(2, 5).T

array([[0, 5],

       [1, 6],

       [2, 7],

       [3, 8],

       [4, 9]])

>>> np.arange(10).reshape(2, 5).T.shape

(5, 2)

2. Pandas

Pandas is an open-source, BSD-licensed Python library that provides high-performance, easy-to-use data structures and data analysis tools. It is designed to make working with “labeled” and “relational” data simple and intuitive. Pandas is an excellent tool for data wrangling: it is built for quick and easy data manipulation, aggregation, and visualization.

Install Pandas −

pip install pandas

There are two main data structures in the library:

“Series” — one-dimensional

A series can be created using various inputs like −

● Array

● Dict

● Scalar value or constant

Create a Series from ndarray

# import the pandas library, aliased as pd

import pandas as pd

import numpy as np

data = np.array(['a', 'b', 'c', 'd'])

s = pd.Series(data)

print(s)

Its output is as follows −

0    a

1    b

2    c

3    d

dtype: object

“DataFrame”, two-dimensional

The two structures work together. For example, you can build a new DataFrame by appending a single row, passed as a Series, to an existing DataFrame.
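Appending a Series as a row can be sketched as follows. The column names and values are hypothetical. I use pd.concat here because the older DataFrame.append method was removed in pandas 2.0, so treat this as one possible way of doing it rather than the only one.

```python
import pandas as pd

# Hypothetical data for illustration.
df = pd.DataFrame({"name": ["a", "b"], "score": [1, 2]})

# A Series whose index matches the DataFrame's column names represents one row.
new_row = pd.Series({"name": "c", "score": 3})

# Turn the Series into a one-row DataFrame and concatenate it.
# (DataFrame.append was removed in pandas 2.0; pd.concat is used instead.)
df = pd.concat([df, new_row.to_frame().T], ignore_index=True)

print(df.shape)  # (3, 2)
```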

Here is just a small list of things that you can do with Pandas:

● Easily delete and add columns from DataFrame

● Convert data structures to DataFrame objects

● Handle missing data, represented as NaNs

● Group data with powerful group-by functionality
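The items in this list can be tried out on a tiny DataFrame. This is only a sketch: the column names, the values, and the choice to fill missing values with 0 are assumptions made for the example.

```python
import numpy as np
import pandas as pd

# Hypothetical data for illustration; one value is missing (NaN).
df = pd.DataFrame({
    "team": ["x", "x", "y", "y"],
    "points": [10.0, np.nan, 7.0, 5.0],
})

# Add a column, then delete it again.
df["bonus"] = 1
df = df.drop(columns=["bonus"])

# Handle missing data: replace NaN with 0.
df["points"] = df["points"].fillna(0.0)

# Group by a column and aggregate.
totals = df.groupby("team")["points"].sum()
print(totals.to_dict())  # {'x': 10.0, 'y': 12.0}
```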

3. SciPy

SciPy contains modules for linear algebra, optimization, integration, and statistics. Its main functionality is built upon NumPy, so it makes substantial use of NumPy arrays. It provides efficient numerical routines, such as numerical integration and optimization, via its specific submodules. The functions in all SciPy submodules are well documented, which is another point in its favor.

The scipy.special sub-package defines numerous functions of mathematical physics. The functions available include Airy, Bessel, beta, elliptic, gamma, hypergeometric, Kelvin, Mathieu, parabolic cylinder, spheroidal wave, and Struve. Let’s have a look at the Bessel function.
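As a small sketch, the Bessel function of the first kind can be evaluated with scipy.special.jv, which takes the order and the points to evaluate at. The order 0 and the sample points below are my own choices for illustration.

```python
import numpy as np
from scipy import special

# Hypothetical sample points for illustration.
x = np.array([0.0, 1.0, 2.0])

# Bessel function of the first kind of order 0, evaluated element-wise.
j0 = special.jv(0, x)

print(j0[0])  # 1.0
print(j0)
```

Like most SciPy functions, jv works on whole NumPy arrays, so no explicit loop is needed.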

Visualization

4. Matplotlib

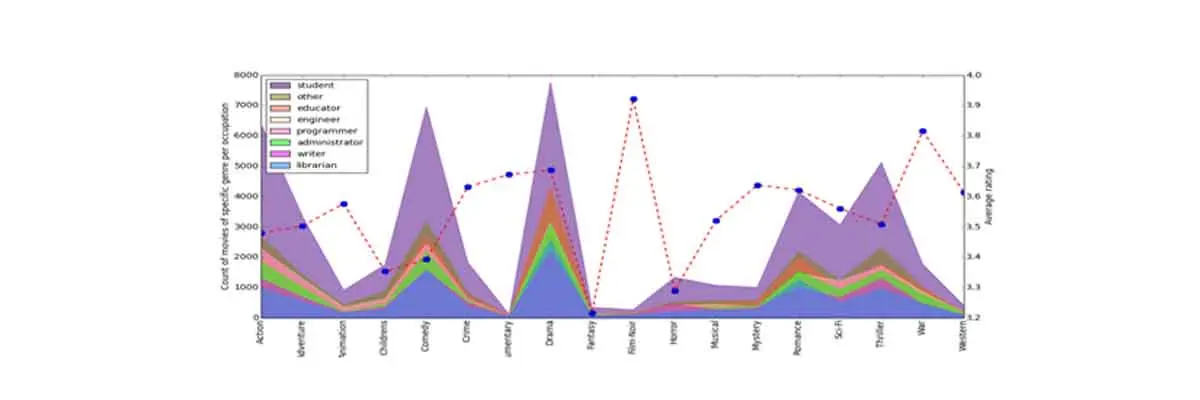

Matplotlib is another core package of the SciPy Stack, tailored for generating simple yet powerful visualizations with ease. It is a top-notch piece of software that, with some help from NumPy, SciPy, and Pandas, makes Python a credible competitor to scientific tools such as MATLAB or Mathematica.

However, the library is fairly low-level: you will need to write more code to reach advanced visualizations, and you will generally put in more effort than with higher-level tools. Still, the overall effort is worth it.

With a bit of effort, you can make just about any visualization:

● Line plots;

● Scatter plots;

● Bar charts and Histograms;

● Pie charts;

● Stem plots;

● Contour plots;

● Quiver plots;

● Spectrograms.

There are also facilities for creating labels, grids, legends, and many other formatting entities with Matplotlib. Basically, everything is customizable.
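Here is a short sketch of those formatting facilities. The data, the labels, and the output file name sine_cosine.png are assumptions for illustration. The Agg backend is selected only so the script also runs on a machine without a display.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so no display is needed
import matplotlib.pyplot as plt
import numpy as np

x = np.linspace(0, 2 * np.pi, 100)

fig, ax = plt.subplots()
ax.plot(x, np.sin(x), label="sin(x)")
ax.plot(x, np.cos(x), label="cos(x)")

# Labels, title, grid, and legend are all set through the Axes object.
ax.set_xlabel("x")
ax.set_ylabel("value")
ax.set_title("Sine and cosine")
ax.grid(True)
ax.legend()

# Hypothetical output file name.
fig.savefig("sine_cosine.png")
```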

The library is supported on different platforms and uses different GUI toolkits to display the resulting visualizations. Various development environments (such as IPython) support Matplotlib.

There are also some additional libraries that can make visualization even easier.

The package is imported into a Python script by adding the following statement −

from matplotlib import pyplot as plt

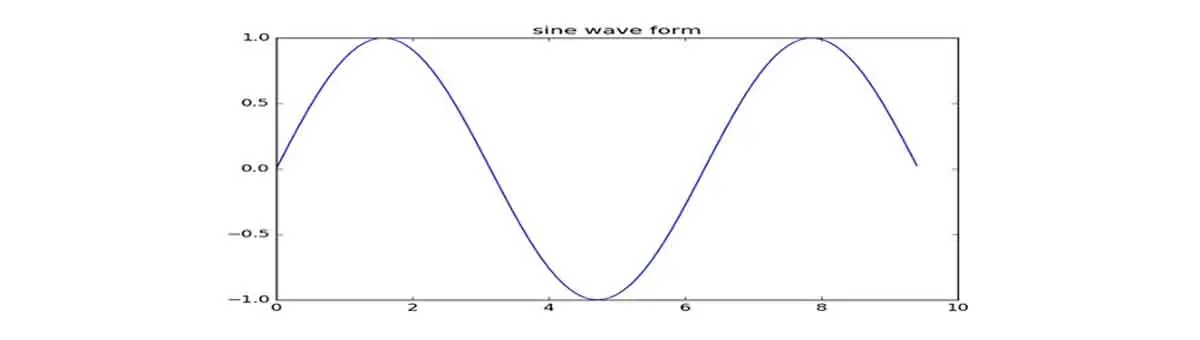

Matplotlib Example

import numpy as np

import matplotlib.pyplot as plt

# Compute the x and y coordinates for points on a sine curve.

x = np.arange(0, 3 * np.pi, 0.1)

y = np.sin(x)

plt.title("sine wave form")

# Plot the points using matplotlib

plt.plot(x, y)

plt.show()

Machine Learning

5. SciKit-Learn

Scikits are additional packages of the SciPy Stack designed for specific functionality such as image processing and machine learning. For machine learning, one of the most prominent of these packages is scikit-learn. It is built on top of SciPy and makes heavy use of its math operations.

scikit-learn exposes a concise and consistent interface to common machine learning algorithms, which makes it simple to bring ML into production systems. The library combines quality code and good documentation with ease of use and high performance, and it is a de facto industry standard for machine learning with Python.
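The consistent interface mostly comes down to the same fit, predict, and score methods on every estimator. The sketch below uses the bundled iris dataset and logistic regression, both chosen by me for illustration. Another estimator could be swapped in without changing the surrounding code.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Load a small bundled dataset and split it into training and test parts.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Every estimator follows the same pattern: construct, fit, predict.
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)
predictions = model.predict(X_test)

# score() reports the mean accuracy on the given test data.
accuracy = model.score(X_test, y_test)
print(accuracy)
```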

Deep Learning — Keras / TensorFlow / Theano

For Deep Learning, one of the most prominent and convenient Python libraries is Keras, which can run on top of either TensorFlow or Theano. Let’s look at some details about all three.

6. Theano

Theano is a Python package that defines multi-dimensional arrays similar to NumPy’s, along with math operations and expressions. Theano compiles these expressions, which lets them run efficiently on different architectures. Originally developed by the Machine Learning group of Université de Montréal, it is primarily used for Machine Learning.

An important point is that Theano integrates tightly with NumPy at the low level of its operations. The library also optimizes the use of the GPU and CPU, making data-intensive computation even faster.

Efficiency and stability tweaks allow for much more precise results even with very small values. For example, computing log(1+x) gives accurate results for even the smallest values of x.
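To see why log(1+x) needs special care, here is a small sketch that uses NumPy rather than Theano. It only illustrates the numerical issue; it does not show how Theano handles it internally. The value of x is an arbitrary choice.

```python
import numpy as np

x = 1e-20

# Naive form: 1 + x rounds to exactly 1.0 in float64, so the result is 0.0.
naive = np.log(1 + x)

# Stable form: log1p evaluates log(1 + x) without forming 1 + x first.
stable = np.log1p(x)

print(naive)   # 0.0
print(stable)  # 1e-20
```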

7. TensorFlow

Developed at Google, TensorFlow is an open-source library for data flow graph computations, tuned for Machine Learning. It was designed to meet the demanding requirements of Google’s environment for training neural networks and is the successor to DistBelief, an earlier Machine Learning system based on neural networks. However, TensorFlow is not limited to scientific use within Google: it is general enough to be used in a variety of real-world applications.

The key feature of TensorFlow is its multi-layered system of nodes, which enables quick training of artificial neural networks on large datasets. This powers Google’s voice recognition and object identification in pictures.

8. Keras

Keras takes a minimalistic approach to design, aimed at fast and easy experimentation through building compact systems.

Keras is really easy to get started with and to keep using for quick prototyping. It is written in pure Python and is high-level in nature. It is highly modular and extensible. Notwithstanding its ease, simplicity, and high-level orientation, Keras is still deep and powerful enough for serious modeling.

The general idea of Keras is based on layers, and everything else is built around them. Data is prepared in tensors; the first layer is responsible for the input of tensors, the last layer is responsible for the output, and the model is built in between.
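The layer-based idea can be sketched with a tiny Sequential model. I assume here that Keras is used through the TensorFlow package. The layer sizes, the activation, and the 4-feature input shape are arbitrary choices for illustration.

```python
from tensorflow import keras

# Input first, output last, and the model is built in between.
model = keras.Sequential([
    keras.Input(shape=(4,)),                   # input: tensors with 4 features
    keras.layers.Dense(8, activation="relu"),  # hidden layer
    keras.layers.Dense(1),                     # output layer
])

# Compile with an optimizer and a loss before training.
model.compile(optimizer="adam", loss="mse")

print(model.output_shape)  # (None, 1)
```

The None in the output shape stands for the batch size, which is left open until data is passed in.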

Data Mining

9. Scrapy

Scrapy is a library for building crawling programs, also known as spider bots, that retrieve structured data, such as contact info or URLs, from the web.

It is open source and written in Python. It was originally designed strictly for scraping, as its name indicates, but it has evolved into a full-fledged framework that can gather data from APIs and act as a general-purpose crawler.

Features of Scrapy

● Scrapy is an open-source, free-to-use web crawling framework.

● Scrapy generates feed exports in formats such as JSON, CSV, and XML.

● It is a cross-platform application framework (Windows, Linux, Mac OS, and BSD).

● Scrapy comes with a built-in service called Scrapyd, which allows uploading projects and controlling spiders using a JSON web service.

● Scrapy has built-in support for selecting and extracting data from sources using either XPath or CSS expressions.

● Because Scrapy is based on a crawler, it can extract data from web pages automatically.

Scrapy can be installed using pip. To install, run the following command −

pip install Scrapy

Creating a Project

You can use the following command to create the project in Scrapy −

scrapy startproject project_name

This will create a directory called project_name for the project. Next, go to the newly created project using the following command −

cd project_name

Controlling Projects

scrapy genspider mydomain mydomain.com

This generates a new spider named mydomain for the domain mydomain.com. Running scrapy with no arguments displays the list of available commands, as listed −

● fetch − It fetches the URL using the Scrapy downloader.

● runspider − It is used to run a self-contained spider without creating a project.

● settings − It gets the value of a project setting.

● shell − It starts an interactive scraping console for the given URL.

● startproject − It creates a new Scrapy project.

● version − It displays the Scrapy version.

● view − It fetches the URL using the Scrapy downloader and shows the contents in a browser.

Some commands are project-related, as listed −

● crawl − It is used to crawl data using the spider.

● check − It runs contract checks on the spiders.

● list − It displays the list of available spiders present in the project.

● edit − It opens the given spider in an editor.

● parse − It parses the given URL with the spider.

● bench − It is used to run a quick benchmark test (the benchmark reports how many pages per minute Scrapy can crawl).

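Sample Code (save it as myspider.py):

import scrapy

class BlogSpider(scrapy.Spider):

    name = 'blogspider'

    # Placeholder start URL (an assumption); replace it with the site to crawl.

    start_urls = ['https://example.com/blog/']

    def parse(self, response):

        # Yield one item per post title found on the page.

        for title in response.css('.post-header>h2'):

            yield {'title': title.css('a ::text').get()}

        # Follow pagination links and parse the next pages the same way.

        for next_page in response.css('a.next-posts-link'):

            yield response.follow(next_page, self.parse)

To run the script: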

scrapy runspider myspider.py

About Author:

Ashish Kumar Gupta is a Sr. Python and JavaScript full-stack developer. He currently works at QSS Technosoft as a Technical Lead. He writes about technology, including Python, JavaScript, Django, TornadoWeb, Flask, Reactjs, Nodejs, RabbitMq, Mqtt broker, Graphql, Redis, Memcached, Celery, Scapy, AWS, Google Cloud, Kibana, databases (Mysql, SqlServer, Mongodb, Sqlite), and web search engine development using Apache Solr and ElasticSearch.

Top 9 Python libraries for Data Science and Machine Learning